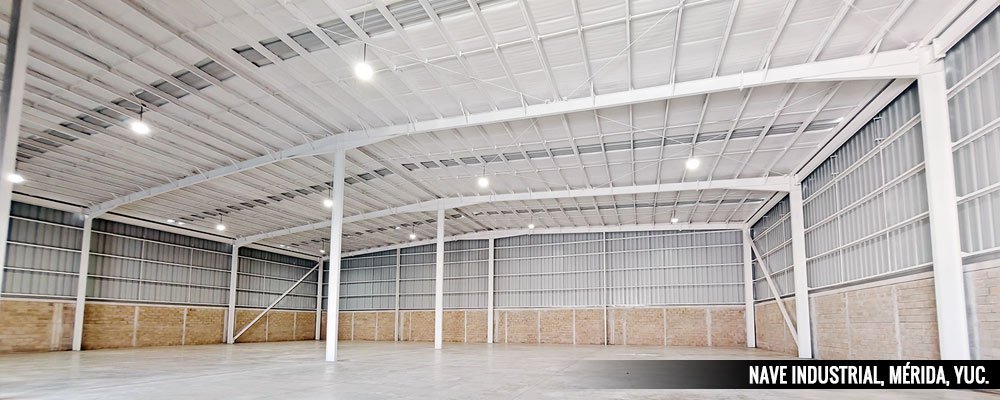

OUR APPROACH

We design and build industrial, commercial, and residential projects, delivering tailored solutions with BIM technology to maximize efficiency and precision. Our projects are Build-to-Suit, and we guarantee high-quality results that optimize return on investment and meet the highest standards.

COMPREHENSIVE SOLUTIONS

SOME OF OUR CLIENTS